Background

In my opinion, SonarQube is not as easy to set up as other DevOps tools such as Jenkins or Artifactory. You can’t just run a script in the bin folder to boot the server.

You also need an installed database, LDAP settings in the config file, and so on.

So I’d like to document some important steps for myself, such as setting up LDAP and PostgreSQL, from my installation of SonarQube v9.0.1. Hopefully it helps others too.

Prerequisite and Download

JRE/JDK 11 must be installed on the machine that runs SonarQube.

Here is the prerequisites overview: https://docs.sonarqube.org/latest/requirements/requirements/

Download SonarQube: https://www.sonarqube.org/downloads/

cd sonarqube/

ls

wget https://binaries.sonarsource.com/Distribution/sonarqube/sonarqube-9.0.1.46107.zip

unzip sonarqube-9.0.1.46107.zip

cd sonarqube-9.0.1.46107/bin/linux-x86-64

sh sonar.sh console

Change Java version

I installed SonarQube on a CentOS 7 machine where the default Java version is OpenJDK 1.8.0_242, but the prerequisites call for at least JDK 11. JDK 11 is also available on my machine, so I only need to switch the Java version.

I recommend using the alternatives command to change the Java version, as follows:

$ java -version
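Before and after switching, it can help to confirm which major Java version is active. The following is only a minimal sketch, assuming bash; java_major is a hypothetical helper written for this post, not part of SonarQube or the alternatives tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the major Java version from a version string.
# Handles the legacy "1.x" scheme (1.8.0_242) and the modern one (11.0.11).
java_major() {
  local version="$1"
  # Drop a leading "1." so that 1.8.0_242 is treated as 8.
  version="${version#1.}"
  # Keep only the digits before the first non-digit character.
  echo "${version%%[!0-9]*}"
}

# `java -version` prints to stderr, so redirect it before parsing.
if command -v java >/dev/null 2>&1; then
  active="$(java -version 2>&1 | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)"
  major="$(java_major "$active")"
  if [ "${major:-0}" -ge 11 ]; then
    echo "Java $active is new enough for SonarQube 9.0.1"
  else
    echo "Java $active is too old; switch with: sudo alternatives --config java"
  fi
fi
```

alternatives --config java is interactive and lists the installed JDKs to choose from, so it is left as a hint here rather than run by the script.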

Install Database

SonarQube requires a database. It supports several database engines, such as Microsoft SQL Server, Oracle, and PostgreSQL. Since PostgreSQL is open source, lightweight, and easy to install, I chose it as the database.

To download and install PostgreSQL, see this page: https://www.postgresql.org/download/linux/redhat/

Troubleshooting

1. How to establish a connection between SonarQube and PostgreSQL

Please refer to the sonar.properties file at the end of this post.
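As a rough illustration of what the connection settings look like, here is a minimal sketch that appends the three sonar.jdbc.* properties to a properties file. The database name, user, password, host, and port are placeholders I made up, and SONAR_PROPERTIES is a hypothetical environment variable; when it is unset the script writes to a temporary file so nothing real is touched.

```shell
#!/usr/bin/env bash
# Assumption: SONAR_PROPERTIES points at <sonarqube>/conf/sonar.properties.
# It falls back to a temporary file so the sketch can be tried safely.
conf_file="${SONAR_PROPERTIES:-$(mktemp)}"

# Placeholder values: a local PostgreSQL with a database and user named "sonarqube".
cat >> "$conf_file" <<'EOF'
sonar.jdbc.username=sonarqube
sonar.jdbc.password=change-me
sonar.jdbc.url=jdbc:postgresql://localhost:5432/sonarqube
EOF

# Show the JDBC settings that are now present in the file.
grep '^sonar\.jdbc\.' "$conf_file"
```

The database and user must already exist in PostgreSQL before SonarQube starts; the values above only tell SonarQube where to connect.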

2. How to set up LDAP for users to log in

sonar.security.realm=LDAP

3. How to fix very slow LDAP login to SonarQube

Commenting out ldap.followReferrals=false in the sonar.properties file should help.

Related post: https://community.sonarsource.com/t/ldap-login-takes-2-minutes-the-first-time/1573/7

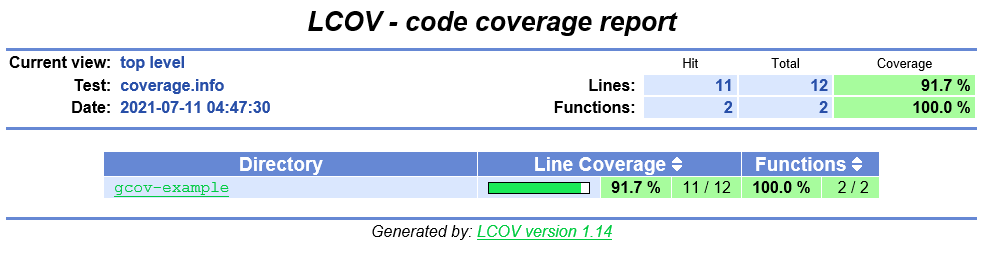

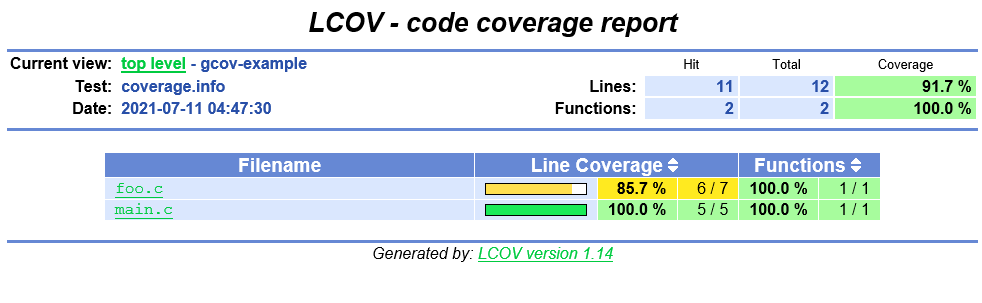

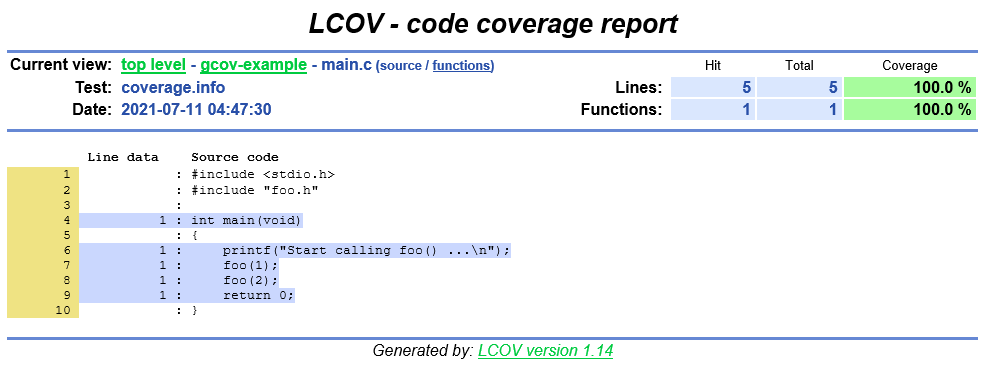

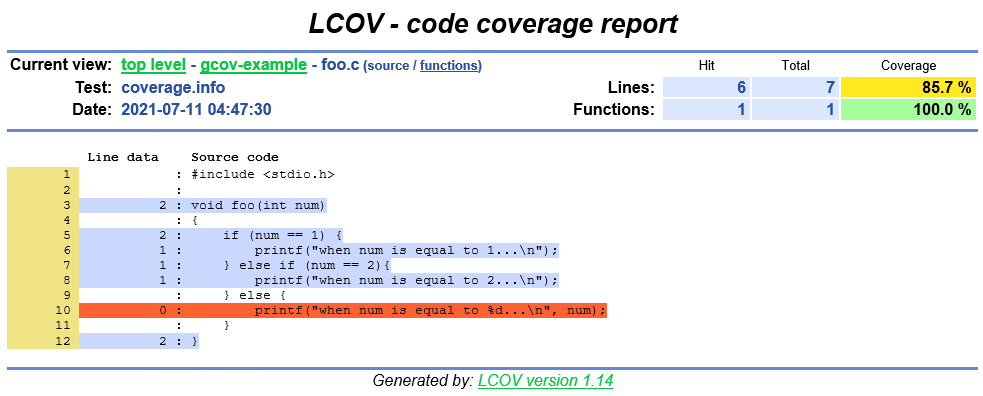

4. How to fix ‘Could not resolve 11 file paths in lcov.info’

I wanted to display JavaScript code coverage results in SonarQube, so I added sonar.javascript.lcov.reportPaths=coverage/lcov.info to sonar-project.properties.

But when I ran sonar-scanner.bat from the command line, the code coverage results did not show up in SonarQube. I noticed the following error in the output:

INFO: Analysing [C:\workspace\xvm-ide\client\coverage\lcov.info]

There are some posts related to this problem, for example https://github.com/kulshekhar/ts-jest/issues/542, but none of them worked in my case.

# here is an example error path in lcov.info

In the end, I had to use the sed command to remove ..\ from the front of the paths before running sonar-scanner.bat, which solved the problem.

sed -i 's/\..\\//g' lcov.info

Please comment if you can solve the problem by changing options in the tsconfig.json file.
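To see what the substitution does without touching a real report, here is a small self-contained sketch. The SF: paths in the sample file are invented for illustration, and the pattern escapes both dots (\.\.\\) so it only matches a literal ..\ prefix; otherwise it is the same idea as the command above.

```shell
#!/usr/bin/env bash
# Build a tiny sample report; these SF: paths are made up for illustration.
work_dir="$(mktemp -d)"
lcov_file="$work_dir/lcov.info"
printf '%s\n' \
  'SF:..\src\app.js' \
  'DA:1,1' \
  'end_of_record' \
  'SF:..\src\util\math.js' \
  'end_of_record' > "$lcov_file"

# Delete every literal "..\" so the paths become relative to the project root.
# GNU sed syntax; BSD/macOS sed needs: sed -i '' 's/.../' file
sed -i 's/\.\.\\//g' "$lcov_file"

# Print the rewritten source-file entries.
grep '^SF:' "$lcov_file"
```

Running it should print the two SF: lines without the leading ..\ while leaving the other records unchanged.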

5. How to output more logs

To output more logs, change sonar.log.level=INFO to sonar.log.level=DEBUG in the sonar.properties file.

Note: all of the above changes to sonar.properties require a restart of the SonarQube instance to take effect.
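The log-level change can also be scripted. This is a minimal sketch, assuming GNU sed and that the setting may still be commented out with a leading #; SONAR_PROPERTIES is a hypothetical environment variable, and without it the script works on a seeded temporary file.

```shell
#!/usr/bin/env bash
# Assumption: SONAR_PROPERTIES points at <sonarqube>/conf/sonar.properties.
conf_file="${SONAR_PROPERTIES:-$(mktemp)}"

# Seed an empty file so the sketch runs on its own.
[ -s "$conf_file" ] || echo '#sonar.log.level=INFO' > "$conf_file"

# Uncomment the setting if needed and switch it to DEBUG.
sed -i 's/^#\{0,1\}sonar\.log\.level=.*/sonar.log.level=DEBUG/' "$conf_file"

# Confirm the new value, then restart SonarQube, for example with:
#   sh bin/linux-x86-64/sonar.sh restart
grep '^sonar\.log\.level=' "$conf_file"
```

Remember to switch back to INFO once the problem is diagnosed, since DEBUG logs grow quickly.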

Final sonar.properties

For the sonar.properties file, please see below or link